It was my first week of work and T minus one week until the Hartree HPC summer school. With practically no knowledge of software programming, I used the time to learn C by completing Project Euler problems and then converting my serial programs to run in parallel. Some of these can be found here.

The following Saturday I made my way up to STFC Daresbury. Now that I think about it, it’s as if Embecosm has been touring me around all the European science centres, following our talk at CERN last October.

Of all the people on the course, I was one of only three who neither had a PhD nor were working towards one. Although I did not do some of the advanced nonlinear algebra, I still picked up lots of knowledge about HPC systems and how to program them.

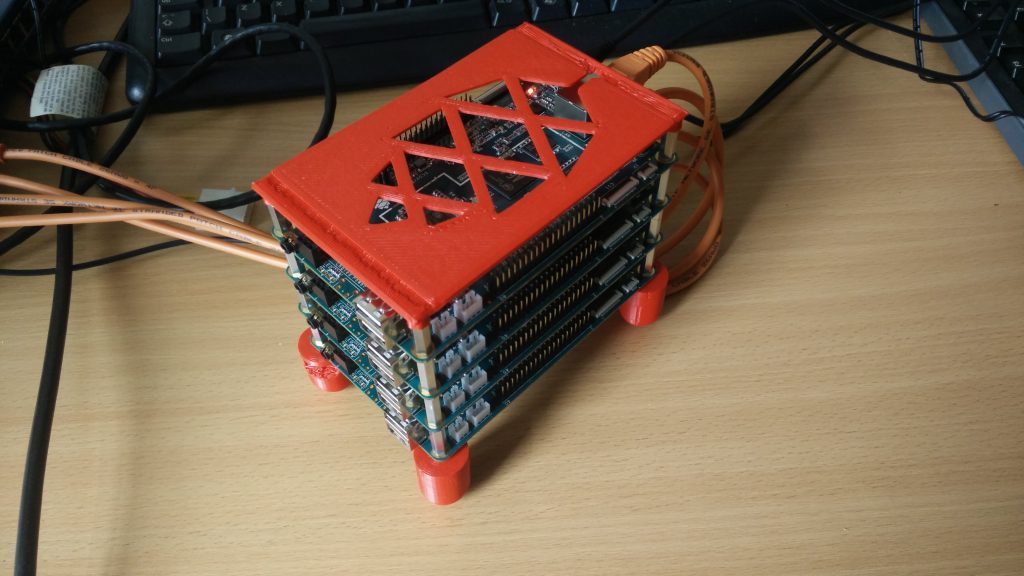

The week I got back, I scavenged around the office and built a cluster from the four Pine64 boards we had.

Pine: ancient email client or softwood decking? In this case neither; it’s a small single-board computer to rival the likes of the well-known Raspberry Pi. We chose the Pine64 because of its ideal features: a 64-bit ARM processor, 2GB RAM and Gigabit Ethernet. However, I’ve encountered problems because we chose a recently released, little-known single-board ARM computer, whereas if we’d chosen a Pi instead there would have been lots of support and “How-to Raspberry Pi” articles.

To create the enclosure I used the humble M3 20mm spacer and our well-loved 3D printer. We printed red accessories to protect the Pines, but more importantly the tables (which may or may not have gained a hole that was not there when I started). Our Pines are connected via Gigabit Ethernet to a nearby switch, and through it to a shared drive on one of our servers.

We originally had problems with the Pine64s’ MAC addresses: they come preset in a config file on the Xubuntu image from Pine64, so every board got the same one and they all conflicted. We therefore made up our own, only to find out later that the real ones are printed on the bottom of the boards. With a simple installation of OpenMPI and an MPI hostfile, we could run C programs in parallel on all four of our original machines, making good use of all 16 cores to print “Hello, world”.

After being greeted enough times to infuriate even a doorman, we decided we wanted to benchmark the Pines. After much configuration and an odd workaround, we could run the Linpack benchmark. With our initial four boards we reached a peak of 5.7 Gflops, which would have placed us in the top 100 supercomputers up until 1994 and in the TOP500 until 1997.

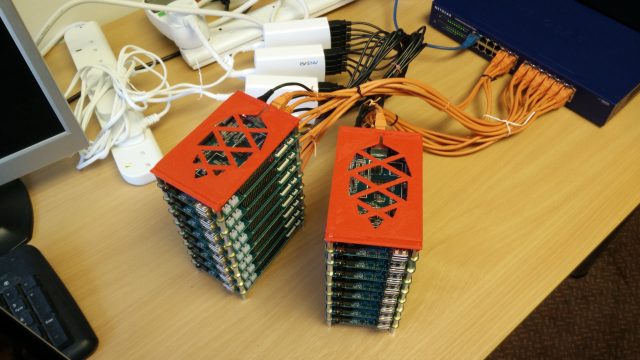

Over the next few weeks the scavenged parts were slowly returned to their original owners, replaced by new parts and joined by a further 12 boards. Whilst waiting for those boards to arrive, I ran Linpack in every combination of board count and cores per board. This began to reveal some odd relationships, which you can read about in my other blog post.

From further analysis of the results I saw a linear relationship between core count and Gflops, so I predicted that with 16 boards (64 cores) we would get a peak of 22.5 Gflops.

After another few weeks the remaining 12 Pines turned up.

It took me only a couple of hours to set up the 12 new boards, though of course it took the rest of the day to write all the SD cards and give each board its correct MAC address. We now also had a switch problem. We had originally bought a 16-port switch, but with 16 boards plus the uplink to our server, at least one Pine would have been left separate from the others, so we decided to get a 24-port switch instead.

Then came the time to benchmark all 64 cores. This did not run as smoothly as it had on the original four boards, and the largest benchmark ran for three hours. I had expected my benchmark script to run over the weekend, but unfortunately it did not. Our best result was about 20 Gflops, well below our estimate, which showed that performance did not scale as linearly as we had thought. However, 20 Gflops would still have put us in the TOP500 until 1999, the year I was born, and in the top 10 supercomputers in 1993, all for less than the cost of the latest iPhone.

The TSERO project aims to reduce the energy consumption of HPC systems. As a follow-up to the MAGEEC project, Embecosm are creating an energy-efficient compiler for supercomputers. However, the energy measurement boards used to obtain energy data have to physically intercept the power supply, which risks damaging the hardware. The Pine cluster therefore acts as an HPC replica that Embecosm can afford to break.

Whilst waiting for the benchmarks to run, I went back to the AAP FPGA implementation I worked on last year, as I had just entered it into the BCS open source project contest. While trying to get the UART working again I encountered a bug, so I had to quickly re-familiarise myself with the code and fix it.

It’s been another great summer at Embecosm. I have learnt how to use SSH, write bash scripts, run benchmarks and present results. I had my first proper experience of software programming, albeit only to make programs run in parallel, like the superior Verilog.